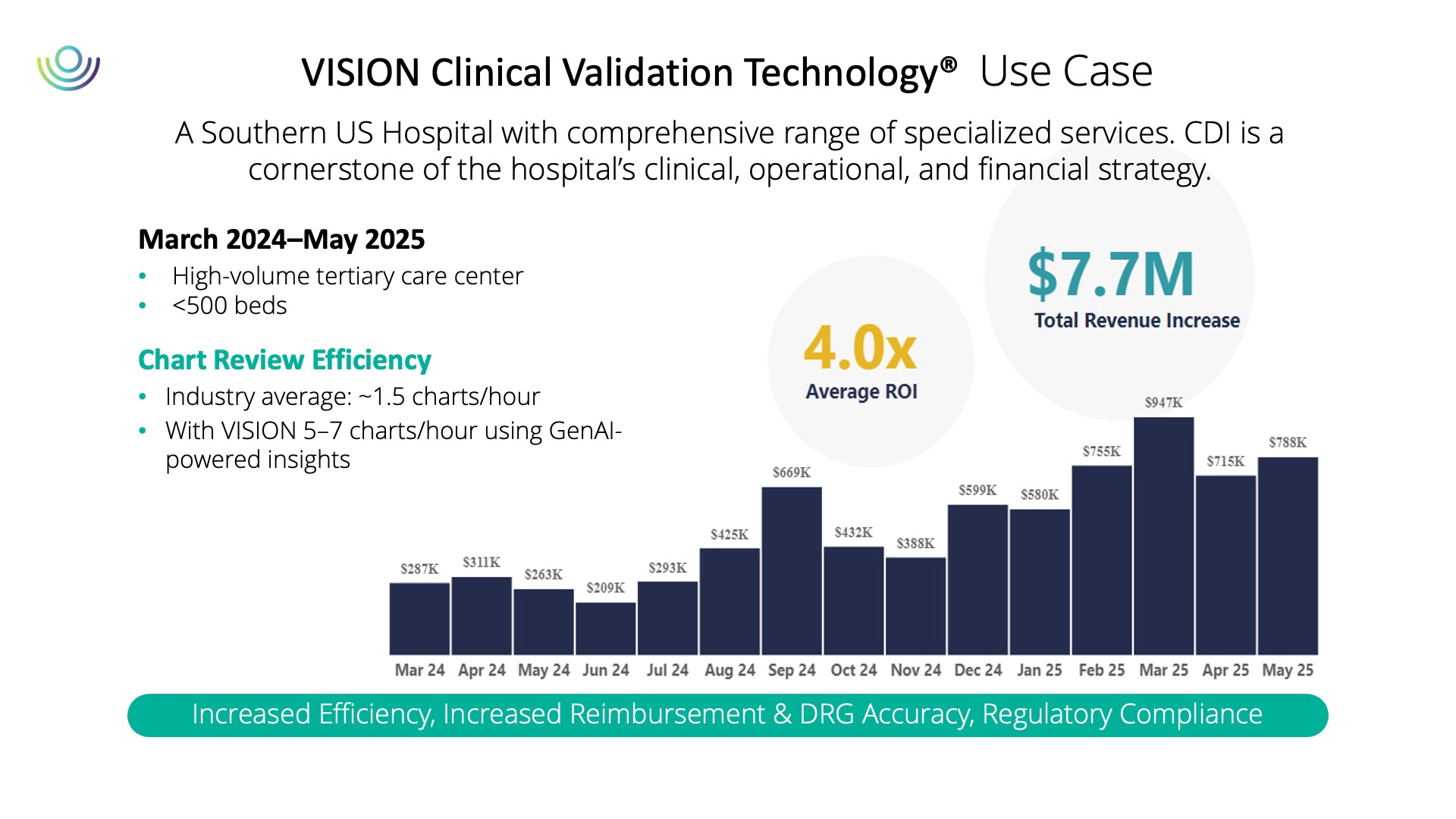

A case from the southern United States illustrates what this looks like in practice. A hospital that integrated AI-driven chart review into its CDI program saw a fourfold increase in revenue opportunities compared to initial coder review. Just as importantly, reviewers were able to move from an industry average of about one and a half charts per hour to five or more. For organizations under constant financial pressure, those kinds of gains are hard to ignore.

Why Privacy and Compliance Cannot Be an Afterthought

The rapid adoption of AI has raised legitimate concerns about compliance. Hospitals must ensure that patient data remains secure and that AI-generated outputs can withstand regulatory scrutiny. Early adopters have responded by running models within private, secure environments such as Azure or AWS, where protected health information is masked before entering the pipeline.
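As a rough illustration of masking protected health information before a note enters the pipeline, the sketch below swaps a few identifier formats for placeholders. The patterns, the `mask_phi` name, and the sample note are all hypothetical. Pattern matching alone is not sufficient for de-identification, since names, addresses, and dates need much broader handling.

```python
import re

# Hypothetical patterns for illustration only; a production pipeline would
# rely on a vetted de-identification service, not three regular expressions.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
}


def mask_phi(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Made-up note text; no real patient data.
note = "Pt MRN: 00123456, callback 555-867-5309, SSN 123-45-6789."
masked = mask_phi(note)
print(masked)
```

The point of the sketch is the ordering: masking runs first, so the model only ever sees placeholders.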

Governance measures have become just as important as technical safeguards. Recommendations are linked back to the clinical chart for transparency. Confidence scores signal where additional human oversight may be needed. Audit trails, version control, and role-based access controls create much-needed accountability. Perhaps most critically, teams of clinicians and coders are involved in training the models and validating their outputs, ensuring that accuracy and compliance are not sacrificed for the sake of efficiency.

This layered approach satisfies auditors while also building the trust clinicians need to embrace the technology. Without that trust, even the most sophisticated AI will struggle to gain traction.

Addressing the Human Factor

The technology may be advancing quickly, but adoption ultimately depends on people. CDI specialists worry that AI might make their role obsolete. Physicians are wary of being second-guessed by an algorithm. These are natural reactions to rapid change, but the evidence so far suggests that AI in CDI enhances human expertise rather than replacing it.

When AI drafts a provider query or flags a missing diagnosis, the final decision still rests with a human reviewer. Specialists are spending less time searching through records and more time validating insights, engaging with providers, and driving quality improvement. Over time, these shifts may even elevate the role of CDI teams, positioning them as strategic advisors on compliance and quality rather than transactional reviewers.

Health systems that have introduced AI successfully emphasize the importance of framing. Positioning AI as a tool that removes repetitive tasks rather than eliminates jobs is essential. Sharing early wins—such as improved denial defense or faster chart turnaround—helps overcome skepticism and spark enthusiasm. Engaging clinicians early and equipping them with training will help reinforce adoption. The message must be clear: AI enriches meaningful work without threatening job security.

Drawing the Line Between AI, GenAI, and Automation

Much of the confusion in these discussions comes from language. Terms like “AI,” “automation,” and “GenAI” are used interchangeably, but they represent distinct capabilities. Automation handles repetitive, rule-driven work. Traditional AI, often through natural language processing (NLP), extracts structured elements from text. Generative AI goes further by synthesizing information and producing novel outputs. Recognizing these differences helps leaders set realistic expectations and invest in the right tools for the right problems.

Tackling the Risk of AI’s “Made-Up” Answers

Another common concern is hallucination, meaning the tendency of generative models to produce content that sounds plausible but is inaccurate. In healthcare, the risks are obvious. To address this, leading organizations are building systems where AI outputs are always tied back to verifiable evidence in the chart. Recommendations carry confidence scores that guide reviewers on where to focus attention. And model governance ensures that outputs are tested against coding guidelines and validated by both clinical and compliance experts.

These guardrails do not eliminate the need for human oversight, but they make AI a safer and more reliable partner in the documentation process.
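One way to picture how evidence links and confidence scores might steer reviewer attention is the minimal sketch below. The `Recommendation` fields, the `triage` function, and the 0.80 threshold are assumptions made for illustration, not a description of any vendor's system. Every path still ends with a person.

```python
from dataclasses import dataclass, field
from typing import List

REVIEW_THRESHOLD = 0.80  # hypothetical cut-off; would be tuned and validated locally


@dataclass
class Recommendation:
    diagnosis: str
    confidence: float  # model-reported score in [0, 1]
    evidence: List[str] = field(default_factory=list)  # chart excerpts cited


def triage(rec: Recommendation) -> str:
    """Route a suggestion; nothing is accepted without a human reviewer."""
    if not rec.evidence:
        # A suggestion that cannot be tied back to the chart is discarded.
        return "reject: no chart evidence"
    if rec.confidence < REVIEW_THRESHOLD:
        return "priority human review"
    return "standard human review"


# Made-up examples for illustration.
print(triage(Recommendation("malnutrition", 0.60, ["BMI 17.2 in nutrition note"])))
print(triage(Recommendation("sepsis", 0.95)))
```

Note that a high score without supporting evidence is still rejected. In this sketch, confidence only sets review priority and never substitutes for a link to the chart.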

Looking Beyond Inpatient CDI

Thus far, much of the innovation has focused on inpatient settings, where DRG validation and payer scrutiny create clear financial stakes. Yet the future lies in outpatient care and risk adjustment models, especially as value-based payment expands. Generative AI’s ability to parse longitudinal patient histories, reconcile conflicting notes, and suggest risk-adjusting diagnoses makes it a natural fit for this next frontier.

Some health leaders are also looking toward integrative AI, meaning systems that combine generative reasoning with decision-making logic across multiple domains. Instead of focusing solely on CDI, these platforms could orchestrate tasks across utilization management, revenue cycle, and compliance. The idea of a unified “clinical intelligence layer” may still be emerging, but it represents the direction many organizations are headed.

Measuring What Matters

Ultimately, the success of AI in CDI will be measured not by novelty but by outcomes. Key measures include throughput gains, meaning how many additional charts reviewers can handle per hour, and financial impact, from higher reimbursement to fewer denials. Compliance indicators such as audit outcomes and appeal success rates are equally important. Clinical quality, reflected in the accurate capture of comorbidities and patient safety indicators, is another measure of value. And finally, user satisfaction, defined as whether CDI specialists and clinicians feel the tools make their work easier and more effective, may be the most critical measure of all.

By focusing on these tangible outcomes, leaders can separate genuine progress from hype.
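Two of these measures reduce to simple ratios, sketched below. The throughput figures are the ones cited earlier in this article (about 1.5 charts per hour rising to 5). The appeal counts and the function names are hypothetical.

```python
def throughput_gain(baseline_per_hour: float, current_per_hour: float) -> float:
    """Return how many times faster reviewers work relative to baseline."""
    return current_per_hour / baseline_per_hour


def rate(successes: int, total: int) -> float:
    """Return successes as a fraction of total, or 0.0 when there is no volume."""
    return successes / total if total else 0.0


# Throughput figures from the hospital case described in this article.
gain = throughput_gain(1.5, 5.0)

# Hypothetical appeal counts, for illustration only.
appeal_success = rate(18, 24)

print(f"Throughput gain: {gain:.2f}x")
print(f"Appeal success rate: {appeal_success:.0%}")
```

Tracked over time, ratios like these give leaders a baseline against which to judge later gains.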

The Road Ahead

AI is reshaping clinical documentation improvement by supporting, rather than replacing, human expertise. Hospitals that introduce it thoughtfully and with transparency are beginning to see tangible improvements in efficiency, reimbursement, and compliance. Expanding these gains requires careful attention both to how AI is applied and to earning the trust of clinicians and CDI teams.

The key to success lies in seeing AI as part of existing clinical practices, not as a standalone solution. When used this way, it fosters a gradual evolution toward records that more accurately and more fully reflect each patient’s care.